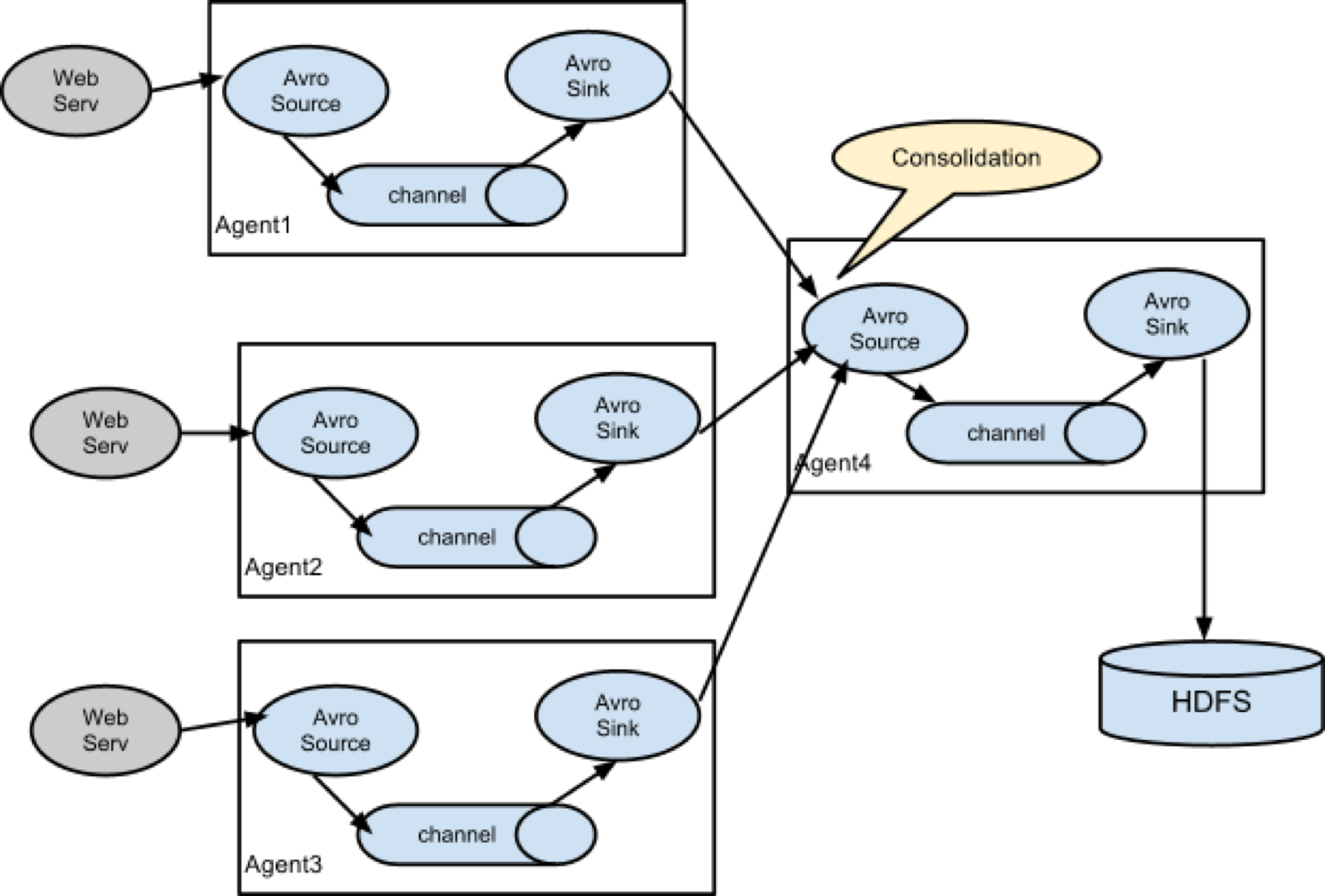

3.6 Case Study: Aggregating Multiple Data Sources

Multiple sources feeding data into a single Flume agent

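3.6.1 Requirements

Flume-1 on hadoop201 monitors the data stream arriving on a port.

Flume-2 on hadoop202 monitors the file /opt/module/group.log.

Flume-1 and Flume-2 send their data to Flume-3 on hadoop203, and Flume-3 prints the combined data to the console.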

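3.6.2 Case Analysis

Both upstream agents end in an Avro sink that points at hadoop203:4141, and Flume-3 runs an Avro source listening on that address, so the two streams merge in Flume-3's channel before its logger sink prints them:

Flume-1 (hadoop201): netcat source on port 44444 → memory channel → Avro sink to hadoop203:4141

Flume-2 (hadoop202): exec source running tail -F /opt/module/group.log → memory channel → Avro sink to hadoop203:4141

Flume-3 (hadoop203): Avro source on hadoop203:4141 → memory channel → logger sink (console)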

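3.6.3 Procedure

Step 1: Create the group3 directory and distribute Flume

Under /opt/module/flume/job, create a directory named group3.

Distribute Flume to the other two machines (hadoop202 and hadoop203).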
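The original does not say which tool it uses to distribute Flume. A minimal sketch of Step 1, assuming Flume is installed at /opt/module/flume, hadoop201 has passwordless SSH to the other two hosts, and rsync is installed everywhere (rsync is swapped in here; many clusters use their own sync script instead). The function names create_job_dir and distribute_flume are hypothetical.

```shell
#!/usr/bin/env sh
# Assumption: Flume lives at the same path on every host.
FLUME_HOME="${FLUME_HOME:-/opt/module/flume}"

# Create the job directory that will hold this case's three config files.
create_job_dir() {
  mkdir -p "$FLUME_HOME/job/group3"
}

# Copy the whole Flume installation (including job/group3) to each host given.
# Assumption: passwordless SSH and rsync are available on all machines.
distribute_flume() {
  local host
  for host in "$@"; do
    # The trailing slash copies the directory's contents into the same path on the target.
    rsync -a "$FLUME_HOME/" "$host:$FLUME_HOME/"
  done
}
```

On hadoop201 this would be run as `create_job_dir && distribute_flume hadoop202 hadoop203`.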

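Step 2: Create flume1-netcat-flume.conf

Configure a source that monitors the data stream on port 44444 and a sink that sends the data to the next-tier Flume agent. On hadoop201, create the file job/group3/flume1-netcat-flume.conf under /opt/module/flume and open it for editing.

The file contents are as follows: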

```properties
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = hadoop201
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = hadoop203
a1.sinks.k1.port = 4141

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

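Step 3: Create flume2-logger-flume.conf

Configure a source that monitors the file /opt/module/group.log and a sink that sends the data to the next-tier Flume agent.

On hadoop202, create the file job/group3/flume2-logger-flume.conf under /opt/module/flume and open it for editing.

The file contents are as follows: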

```properties
# Name the components on this agent
a2.sources = r1
a2.sinks = k1
a2.channels = c1

# Describe/configure the source
a2.sources.r1.type = exec
a2.sources.r1.command = tail -F /opt/module/group.log
a2.sources.r1.shell = /bin/bash -c

# Describe the sink
a2.sinks.k1.type = avro
a2.sinks.k1.hostname = hadoop203
a2.sinks.k1.port = 4141

# Describe the channel
a2.channels.c1.type = memory
a2.channels.c1.capacity = 1000
a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```

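Step 4: Create flume3-flume-logger.conf

Configure a source that receives the data streams sent by flume1 and flume2, and a sink that prints the merged data to the console. On hadoop203, create the file job/group3/flume3-flume-logger.conf under /opt/module/flume and open it for editing.

The file contents are as follows: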

```properties
# Name the components on this agent
a3.sources = r1
a3.sinks = k1
a3.channels = c1

# Describe/configure the source
a3.sources.r1.type = avro
a3.sources.r1.bind = hadoop203
a3.sources.r1.port = 4141

# Describe the sink
a3.sinks.k1.type = logger

# Describe the channel
a3.channels.c1.type = memory
a3.channels.c1.capacity = 1000
a3.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a3.sources.r1.channels = c1
a3.sinks.k1.channel = c1
```
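The whole fan-in depends on one detail: each upstream Avro sink's hostname and port must match the bind address and port of a3's Avro source. A small sanity check, sketched under the assumption that copies of the files being compared are on the machine where it runs and that they use the component names r1 and k1 shown above. The helpers prop and check_avro_link are hypothetical. The comparison is a literal string match, so a source bound to 0.0.0.0 would be reported as a mismatch even though it would accept the connection.

```shell
#!/usr/bin/env sh
# Print the value of one key from a Flume properties-style config file.
# $1 = config file, $2 = full key, e.g. a1.sinks.k1.hostname
prop() {
  awk -F'=' -v key="$2" '
    { k = $1; gsub(/^[ \t]+|[ \t]+$/, "", k) }   # trimmed key part of the line
    k == key {
      v = substr($0, index($0, "=") + 1)         # everything after the first "="
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      print v
      exit
    }
  ' "$1"
}

# Check that an upstream Avro sink (k1) targets the downstream Avro source (r1).
# $1 = upstream conf, $2 = upstream agent, $3 = downstream conf, $4 = downstream agent
check_avro_link() {
  local sink_target source_addr
  sink_target="$(prop "$1" "$2.sinks.k1.hostname"):$(prop "$1" "$2.sinks.k1.port")"
  source_addr="$(prop "$3" "$4.sources.r1.bind"):$(prop "$3" "$4.sources.r1.port")"
  if [ "$sink_target" = "$source_addr" ]; then
    echo "OK: $1 -> $source_addr"
  else
    echo "MISMATCH: $1 sends to $sink_target, but $3 listens on $source_addr"
    return 1
  fi
}
```

For example, `check_avro_link flume1-netcat-flume.conf a1 flume3-flume-logger.conf a3` should report `OK: flume1-netcat-flume.conf -> hadoop203:4141`, and the same check with flume2-logger-flume.conf and a2 covers the second link.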

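Step 5: Start the three agents, one on each machine

Run each command from /opt/module/flume on the host noted in the comment. Start a3 on hadoop203 first, so that its Avro source is already listening on port 4141 when the Avro sinks of a1 and a2 try to connect:

```shell
# On hadoop203
bin/flume-ng agent -c conf -f job/group3/flume3-flume-logger.conf -n a3 -Dflume.root.logger=INFO,console
# On hadoop202
bin/flume-ng agent -c conf -f job/group3/flume2-logger-flume.conf -n a2
# On hadoop201
bin/flume-ng agent -c conf -f job/group3/flume1-netcat-flume.conf -n a1
```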
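The Avro sinks in a1 and a2 can only deliver events once a3's Avro source accepts connections on port 4141. One way to confirm that on hadoop203 before starting the upstream agents is to poll the listening TCP sockets. This sketch assumes the ss utility from iproute2 is installed; wait_for_listen is a hypothetical helper name.

```shell
#!/usr/bin/env sh
# Return 0 as soon as some local socket is listening on TCP port $1,
# polling once per second for at most $2 attempts (default 30).
wait_for_listen() {
  local port="$1" tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    # Column 4 of `ss -lnt` is "Local Address:Port", e.g. 0.0.0.0:4141
    if ss -lnt | awk '{print $4}' | grep -q ":$port\$"; then
      return 0
    fi
    sleep 1
    tries=$((tries - 1))
  done
  return 1
}
```

In a second terminal on hadoop203, `wait_for_listen 4141 && echo "a3 is ready"` waits until the Avro source is up; after that, a2 and a1 can be started.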

Step 6: On hadoop202, append content to group.log in the /opt/module directory
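A minimal way to generate test input for a2, assuming the path used in a2's exec source. It appends with `>>` so earlier lines are kept and each new line is picked up by `tail -F`. The function name append_test_lines and the line text are hypothetical.

```shell
#!/usr/bin/env sh
# Assumption: the same file that a2's exec source tails.
LOG_FILE="${LOG_FILE:-/opt/module/group.log}"

# Append $1 numbered test lines (default 3) to LOG_FILE.
append_test_lines() {
  local n="${1:-3}" i=1
  while [ "$i" -le "$n" ]; do
    echo "group.log test line $i" >> "$LOG_FILE"
    i=$((i + 1))
  done
}
```

Running `append_test_lines 3` on hadoop202 should make three new events show up in a3's console on hadoop203.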

Step 7: On hadoop201, send data to port 44444

```shell
telnet hadoop201 44444
```
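telnet is interactive; to send a fixed batch of lines from a script instead, the sketch below pipes them to nc, assuming an nc binary is installed on hadoop201. With its default settings the netcat source replies `OK` for each line it accepts. Whether nc exits by itself after its input ends depends on the nc implementation installed; if it keeps waiting, stop it with Ctrl+C. The function name send_lines is hypothetical.

```shell
#!/usr/bin/env sh
# Send each remaining argument as one newline-terminated line to host $1, port $2.
# Assumption: an `nc` binary is available on this machine.
send_lines() {
  local host="$1" port="$2"
  shift 2
  printf '%s\n' "$@" | nc "$host" "$port"
}
```

For example, `send_lines hadoop201 44444 hello flume` sends two events, which should then appear in a3's console alongside the lines from group.log.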

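Step 8: Check the console output of a3 on hadoop203. Both the lines sent to port 44444 and the lines appended to group.log should appear there.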